Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple | by Alejandro Saucedo | Towards Data Science

How We Boosted Video Processing Speed 5x by Optimizing GPU Usage in Python | by Lightricks Tech Blog | Medium

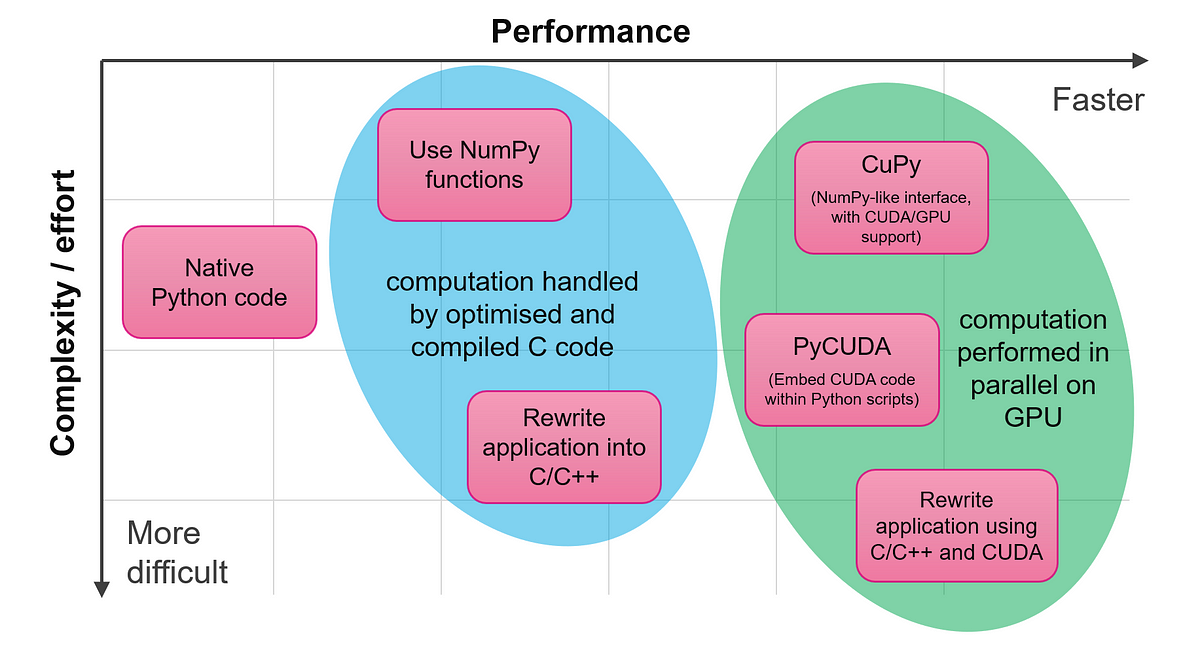

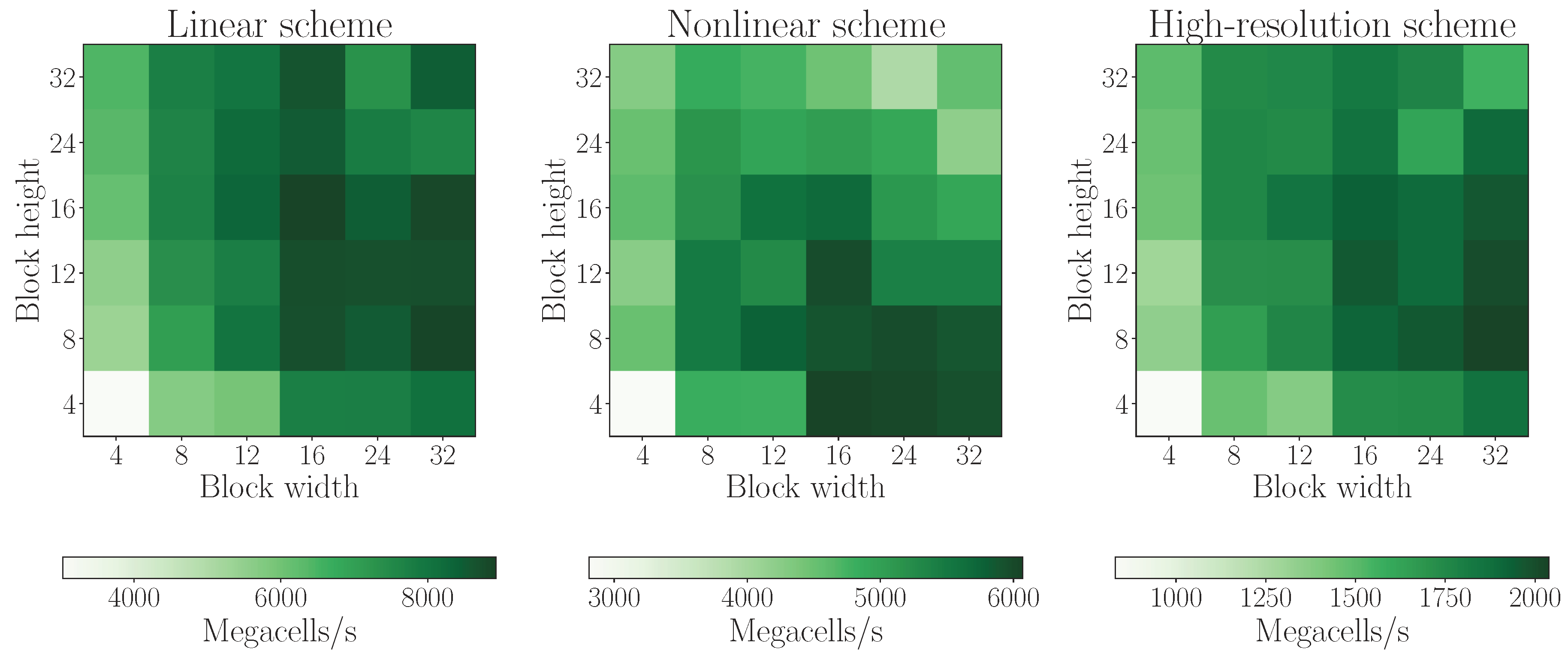

GPU Computing with Python: Performance, Energy Efficiency and Usability | Computation

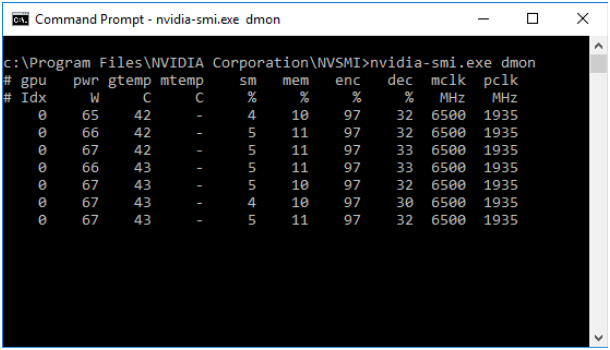

Executing a Python Script on GPU Using CUDA and Numba in Windows 10 | by Nickson Joram | Geek Culture | Medium

Hands-On GPU Computing with Python: Explore the capabilities of GPUs for solving high performance computational problems | by Avimanyu Bandyopadhyay | ISBN 9781789341072 | Amazon.com

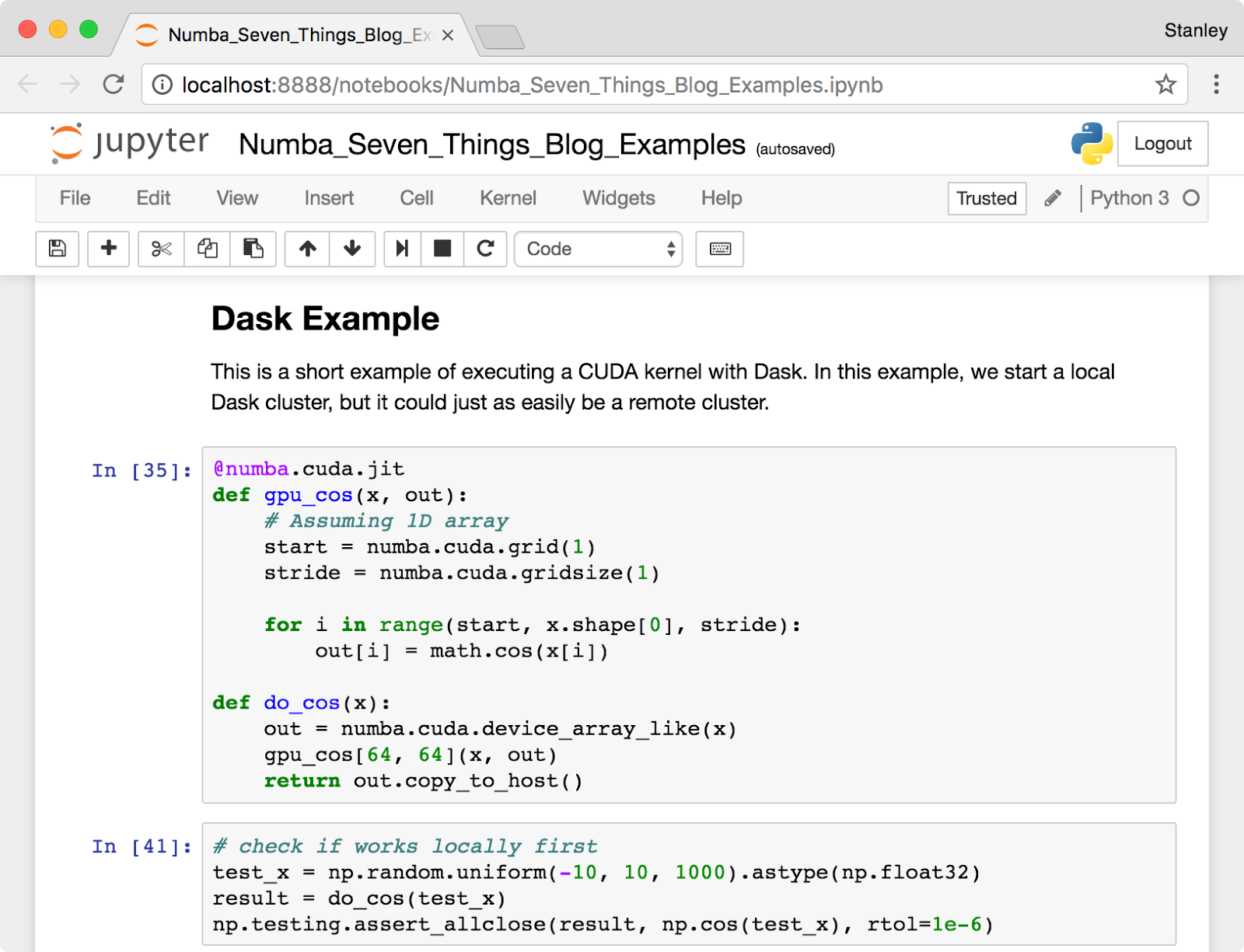

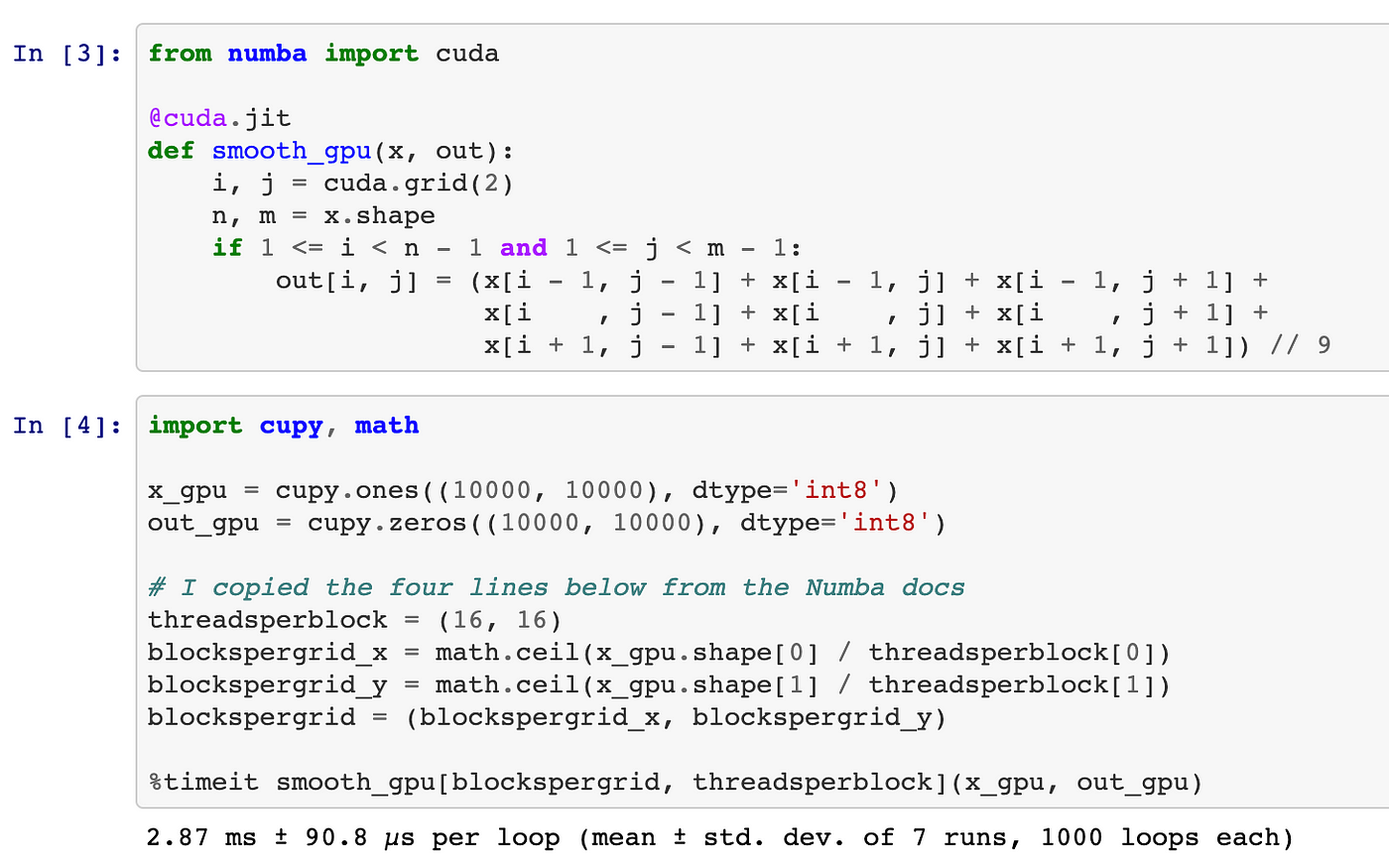

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science